Tesla Full Self-Driving

The car

We got a Model Y a month ago. So far, we've loved the vehicle. Massive upgrade from our 2007 RAV4. At-home electric charging is a complete game changer. During the workday, Jessica uses 10-20% of the battery driving 30-60 miles between patients, and we recharge it all overnight.

Self-driving

In late 2015, Elon thought autonomy would be solved by 2018. That didn't turn out to be the case! But in 2024, we're actually extremely close. Waymo is doing a fully autonomous taxi service in San Francisco using more traditional lidar techniques. Tesla vision-only FSD is very good. It's not yet perfect, but I think totally human-level is now actually in sight.

The main improvement was switching from more hard-coded self-driving logic to an "end-to-end" neural network, where the network is just trained on human driving data. I have some questions about whether the end-to-end network is truly end-to-end or still contains some hard-coding in a few places.

This post is mainly an analysis of where FSD is currently not as good as a human.

Planning early lane changes

A common one we hit in traffic-laden Seattle is that certain highway entrances and exits will back up with traffic for a quarter mile. You have to merge very early to get in this line. FSD doesn't have this local knowledge and apparently can't yet see a long line of cars in the lane it will need to merge into and reason that it should merge early.

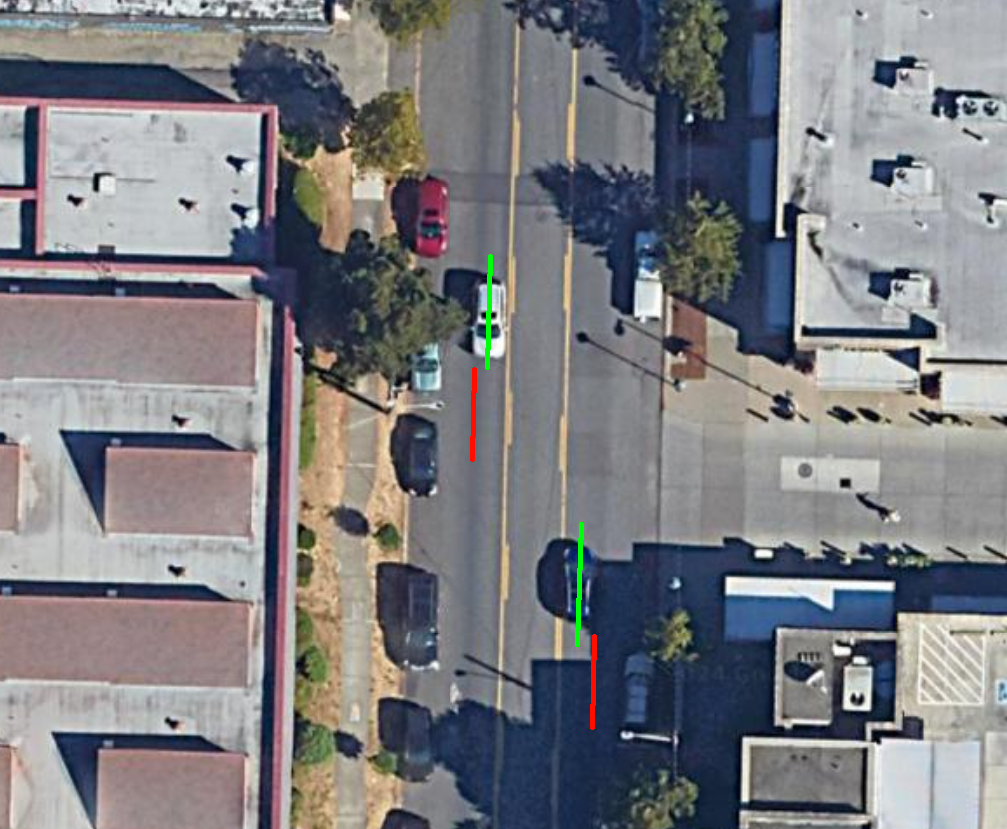

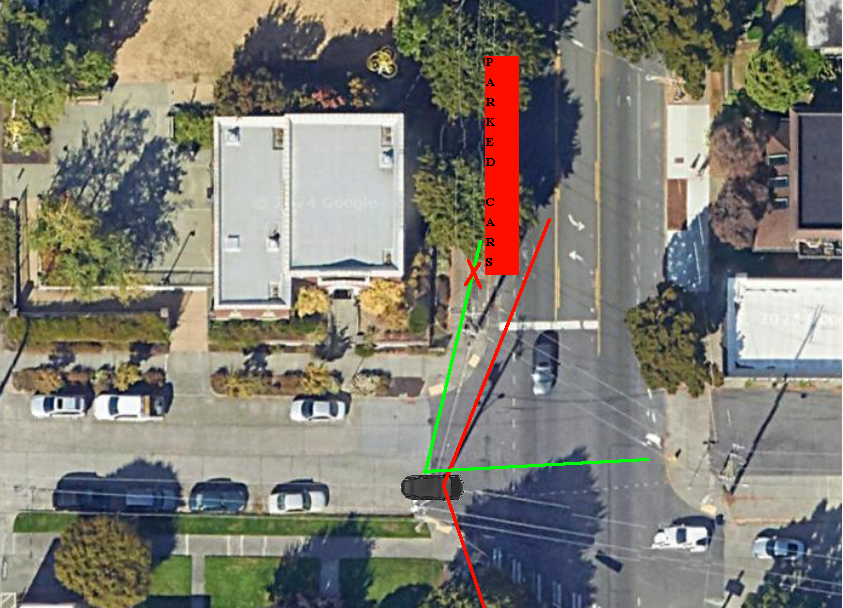

Here's one on Mercer Street in Seattle:

In the photo, traffic backs up on the I-5 entrance ramp and into the right lane along the red line. FSD doesn't consider merging until around the green box, but by then it's totally boxed out by a wall of cars. You can't stop to try to squeeze in without causing a huge traffic jam.

This basic pattern repeats all over the place where lines of cars form to make some entrance/exit, and FSD doesn't know it needs to get in line early.

I think to solve this, Tesla needs to include a few frames of historically successful navigation of that route segment into the context window of the neural network.

That is, the FSD neural network takes in the last N seconds of driving data to decide what to do next. I think it needs to also take in just a few frames of a past-successful navigation of the route segment about to be taken, ideally at a similar time of day, so it can "remember" it needs to merge early.

This is basically like Retrieval Augmented Generation (RAG) for self-driving. It's probably infeasible for a neural network as small as FSD (low single-digit billions of parameters) to memorize locale-specific knowledge like this, so you need a RAG-like approach instead.
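Here's a minimal sketch of what that retrieval step could look like. This is my own illustration, not how Tesla does anything: `StoredDrive`, `segment_id`, and the function names are all hypothetical, and the "frames" are just opaque placeholders. The idea is only to look up past successful drives of the upcoming route segment, pick the one closest in time of day, and put a few of its frames in front of the live frames.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoredDrive:
    """One past successful traversal of a route segment (hypothetical record)."""
    segment_id: str      # hypothetical identifier for a stretch of road
    hour_of_day: float   # local time of the drive, 0 <= hour < 24
    frames: tuple        # low-resolution frames; opaque placeholders here


def hour_distance(a: float, b: float) -> float:
    """Distance between two times of day on a 24-hour clock (wraps at midnight)."""
    d = abs(a - b) % 24
    return min(d, 24 - d)


def retrieve_reference_frames(drives, segment_id, hour_now, max_frames=4):
    """Return up to max_frames from the stored drive closest in time of day.

    Returns an empty tuple when no past drive exists for the segment.
    """
    candidates = [d for d in drives if d.segment_id == segment_id]
    if not candidates:
        return ()
    best = min(candidates, key=lambda d: hour_distance(d.hour_of_day, hour_now))
    return tuple(best.frames[:max_frames])


def build_context(recent_frames, drives, upcoming_segment_id, hour_now):
    """Prepend retrieved reference frames to the live frames."""
    reference = retrieve_reference_frames(drives, upcoming_segment_id, hour_now)
    return reference + tuple(recent_frames)
```

With a morning and an evening drive stored for the same segment, a 5 pm trip would pull the evening frames, which is the "similar time of day" part of the idea.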

The retrieved frames can probably be quite low resolution, both temporally and in terms of pixels. 1 frame per few seconds and 256x256 pixels is probably fine. You don't need that much information from them, just the basic trajectory to take.
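To get a rough feel for how little data that is, here's a back-of-the-envelope sketch. The 256x256 size and "one frame per few seconds" come from above. Everything else is an assumption I picked for illustration: 3 seconds per frame, 8-bit RGB, no compression, a 30-second route segment, and a 30 fps source recording.

```python
def subsample(frames, source_fps, seconds_per_frame=3.0):
    """Keep one frame every seconds_per_frame seconds of source footage."""
    step = max(1, round(source_fps * seconds_per_frame))
    return frames[::step]


def retrieved_frame_budget(segment_seconds, seconds_per_frame=3.0,
                           side_px=256, channels=3, bytes_per_value=1):
    """Return (frame_count, total_uncompressed_bytes) for one route segment."""
    frame_count = int(segment_seconds // seconds_per_frame)
    bytes_per_frame = side_px * side_px * channels * bytes_per_value
    return frame_count, frame_count * bytes_per_frame


if __name__ == "__main__":
    # Assumed 30-second segment: 10 frames, 1,966,080 bytes uncompressed.
    count, total_bytes = retrieved_frame_budget(30)
    print(count, total_bytes)
```

Under those assumptions, a 30-second segment is 10 frames and just under 2 MB uncompressed.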

Inhuman stop sign behavior

I think this one is due to the NHTSA forcing Tesla to ban any form of rolling stop. I recall, but can't find the video of, Elon saying they had to mine through the training data to find people who actually come to a complete, 100%, 0 mph stop at stop signs, and only around 0.5% of drivers actually do it consistently.

If you sit by a stop sign and watch, you'll see this clearly. Here's a video of someone doing this. None of the cars actually come to a true stop (watch the spokes of the wheels).

So, Tesla has either mined their data and trained only on stop sign data from these 0.5% of drivers or, more likely to me, put in some hard-coded behavior or simulated data. Worse than the full stop, though, is stopping right at the sign instead of where most humans stop.
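The data-mining option is easy to picture as a filter over speed traces. This is a toy sketch with made-up numbers, not real fleet data and not Tesla's pipeline. I'm assuming each stop sign approach is a list of speed samples in mph, and the names and the `tolerance_mph` parameter are mine.

```python
def came_to_full_stop(speeds_mph, tolerance_mph=0.0):
    """True if the trace ever drops to tolerance_mph or below (0.0 = true stop)."""
    return bool(speeds_mph) and min(speeds_mph) <= tolerance_mph


def select_full_stop_traces(traces, tolerance_mph=0.0):
    """Keep only the approaches where the driver actually stopped."""
    return [t for t in traces if came_to_full_stop(t, tolerance_mph)]


if __name__ == "__main__":
    # Made-up example approaches (mph samples), for illustration only.
    toy_traces = [
        [25, 12, 3, 1.5, 4, 20],    # rolling stop
        [25, 10, 0.0, 0.0, 5, 20],  # complete stop
        [30, 8, 0.4, 6],            # almost stopped
    ]
    kept = select_full_stop_traces(toy_traces)
    print(f"{len(kept)} of {len(toy_traces)} traces are complete stops")
```

If only around 0.5% of drivers pass a filter like this consistently, you end up throwing away almost all of your stop sign data, which is part of why hard-coded behavior or simulated data seems more likely to me.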

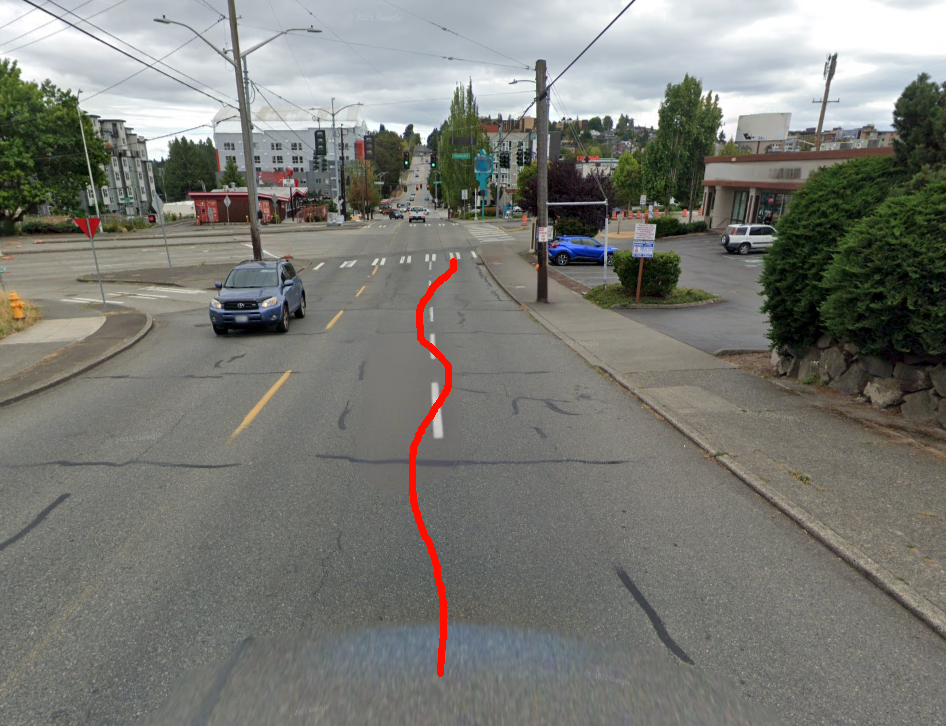

Here's an example:

This intersection is in my neighborhood. The red line is where the stop sign actually is. The green line is where most humans stop. FSD stops at the red line, and then slowwwwly creeps forward to the green line, stops again, then goes.

This may be technically to the letter of the law, and Tesla's hands may be tied by the NHTSA here, but it's inhuman and actually increases danger because humans aren't accustomed to it. Stopping at the green line is fine.

Inhuman lane centering

FSD stays centered in the lane in places humans do not. I suspect this is due to some artificial training data or some other heuristic — a real end-to-end model trained on human data wouldn't behave this way.

Here's an example:

We have a 5 lane road with 2 parking lanes, 2 travel lanes, and 1 center turn lane.

As you can see, both moving cars are biased towards the center turn lane to give the parked cars more space, since you never know when someone is going to open a door or something. It's a lot more comfortable to drive near the center like this, and everyone does it subconsciously.

FSD drives in the center of the lane. Compare the red line (FSD) to the green line (human). I didn't realize how much humans do this until I disengaged FSD on this road due to being uncomfortable with how little gap was between us and the parked cars.

I'm more comfortable with this now and use FSD on this road, but it's still inhuman and not as chill as I'd like.

This behavior is a little scarier on curved highway entrance/exit ramps with no shoulder where humans bias towards the inside edge of the curve, keeping more distance between the car and the outer wall. FSD will happily drive much closer to the outside wall, like the red line here:

I take over on these sections because it would be very bad if FSD decided to disengage for some reason: the curve would send the car towards the wall without much time to react. If I had a guarantee that FSD wouldn't disengage on these sections for any reason, I would be more willing to leave it on, despite the inhuman lane centering.

Lane indecision

This one is usually more annoying and funny than unsafe, but FSD will occasionally find itself straddling a lane line and do a little zig-zag, unable to decide which lane to be in. I feel like this has got to be caused by one of:

- Some dumb hard-coded heuristic going wrong

- Camera resolution means sometimes the network can barely see something that makes it lean one way, and then in the next frame it can't quite see it, so it leans the other way, etc.

- ???

It's super inhuman and doesn't make me think end-to-end neural network. Virtually no training data has this behavior.
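To make the camera-resolution guess above concrete, here's a toy sketch of how a decision made fresh on every frame can flip-flop when the evidence hovers around the decision point. This is purely my illustration of the hypothesis, with made-up numbers. The "evidence for the left lane" score and the 0.5 threshold are invented, and I have no idea whether FSD has anything like them.

```python
def choose_lane(evidence_left, threshold=0.5):
    """Memoryless per-frame choice based on a made-up evidence score in [0, 1]."""
    return "left" if evidence_left >= threshold else "right"


def count_lane_flips(evidence_per_frame, threshold=0.5):
    """Return the per-frame choices and how many times the choice changed."""
    choices = [choose_lane(e, threshold) for e in evidence_per_frame]
    flips = sum(1 for a, b in zip(choices, choices[1:]) if a != b)
    return choices, flips


if __name__ == "__main__":
    # Barely-visible cue: the score wobbles around the threshold frame to frame.
    print(count_lane_flips([0.52, 0.48, 0.53, 0.47, 0.51]))
    # Clearly visible cue: the choice never changes.
    print(count_lane_flips([0.90, 0.88, 0.91]))
```

The wobbling sequence changes its mind on every frame, while the clear one never does, which is roughly what the zig-zag feels like from the driver's seat.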

For example, there is a road near us that goes from 1 lane to 2 right before a stop light. FSD has trouble deciding which lane to get into, especially when the light is red. It'll often take a path like this:

When the light is red, sometimes it doesn't even wind up fully inside its final lane before getting to the stop line.

This is probably the most obviously bad behavior of the current version of FSD. The others are at least somewhat understandable if you know a little about how the tech works.

Insufficient camera angles

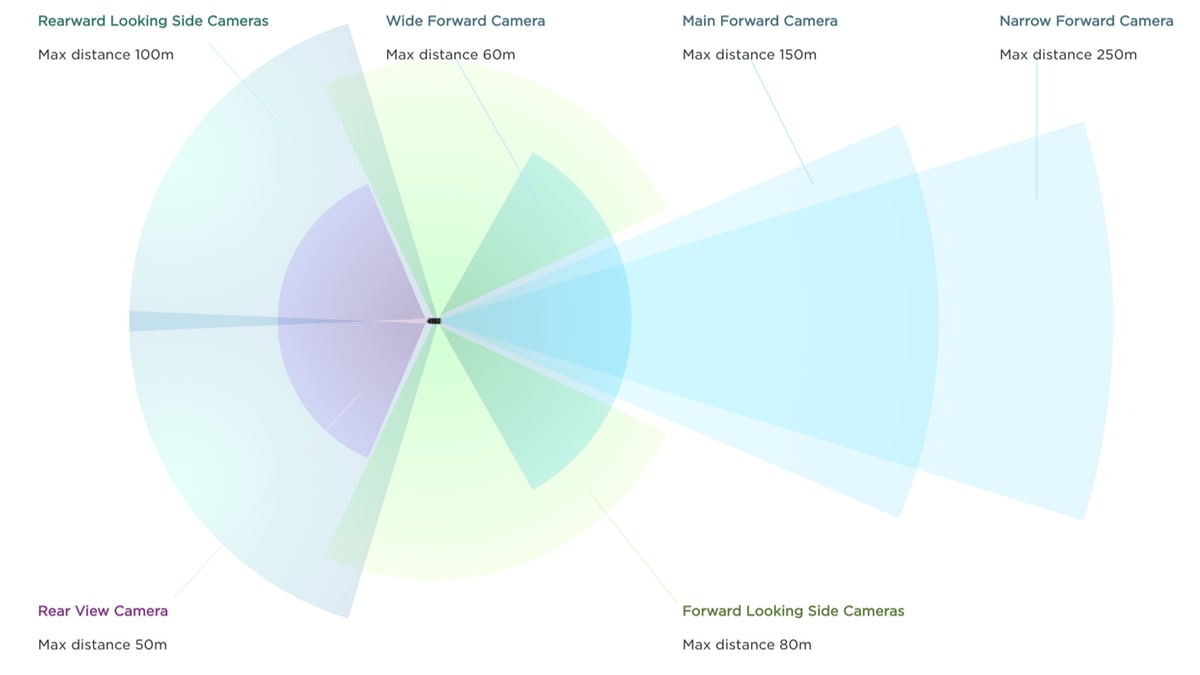

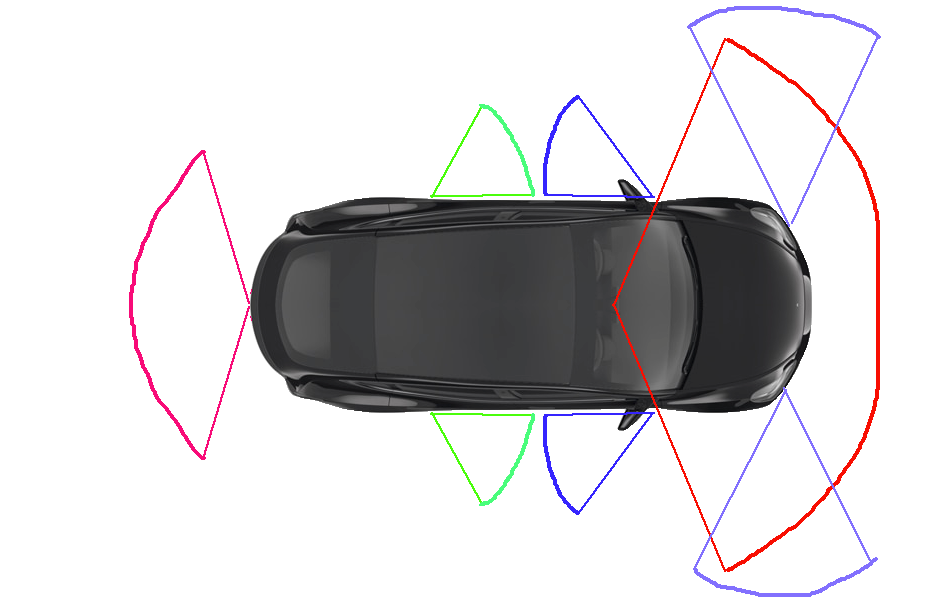

Here are the fields of view of the cameras on a Tesla Model Y:

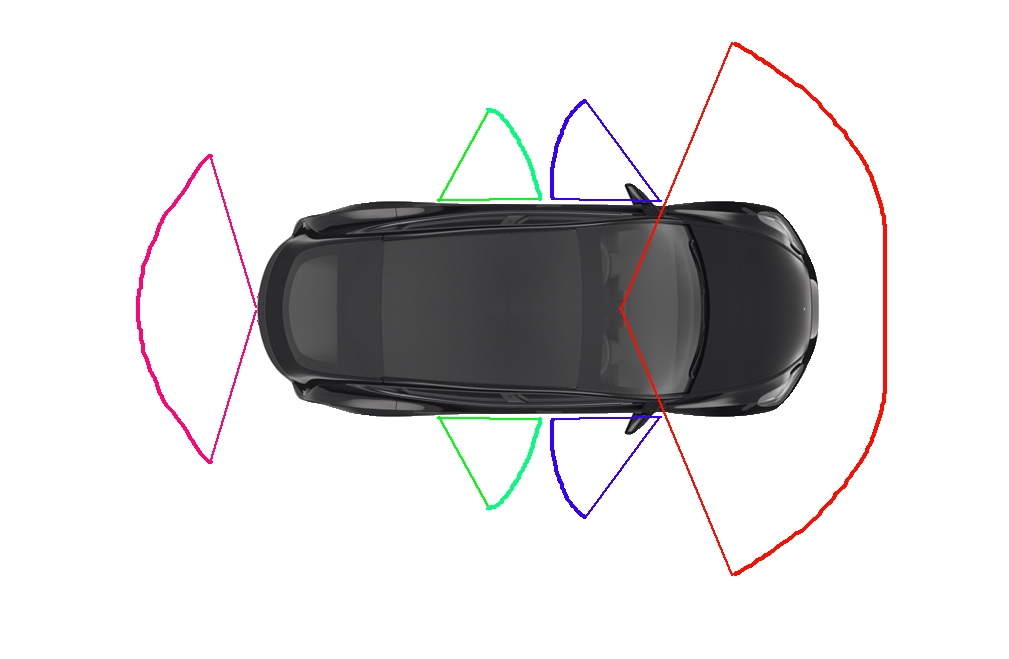

This looks pretty good! 360 degree coverage! But if you zoom into the car and actually see where the cameras are, it's more like this (excuse the crappy MS Paint drawing):

This is problematic in situations like this:

The parked cars significantly reduce visibility for oncoming traffic. The only camera with a view of oncoming traffic behind the parked cars is the forward facing side camera, but I believe it's more of a fisheye lens and I don't think it has enough acuity to look through the windows of the parked cars to detect oncoming traffic (or the neural network hasn't been trained on enough of this sort of data).

You really want wide side cameras on the front corners of the vehicle looking out sideways, like a hammerhead shark, so the car can just peek its nose out in low-visibility situations like this. See the purple lines in this adjusted FOV image:

These cameras are really only needed for tight city driving with lots of occlusions caused by parked cars and other things.

It's honestly a bad road design decision that Seattle allows parked cars in places like this, because it's problematic for human drivers too.

There is probably a technique the car could use where it pulls forward and right, allowing its rear left side camera to get a peek to the left, but this would be pretty inhuman and freaky if you didn't understand why it was doing it.

Theory of mind in single-lane negotiation

Seattle has a lot of residential streets that have parking on both sides. When these were built in the 40s, cars were narrower, so the streets probably had room for travel in both directions. With modern wide cars, there is only enough room for one car to drive down the middle, but the streets are still 2-way.

Here's an example:

You're driving on this road and another car turns into the other end. You're staring each other down. You both look at all the available pull-over spots. The best spot is marked by the green line: pull over in front of the black truck. Since that spot is on the right, I should keep going forward while the car that just turned in will stop and wait for me to pull over into the green slot, then they will pass, then I will proceed.

This requires a lot of theory of mind and local knowledge! FSD is currently not great at this situation and mostly just freezes, but I think a bigger neural net and more training data could fix it.

Conclusion

It seems likely that Tesla will have a much better camera suite on their upcoming robotaxi vehicle that overcomes the blind spots, and I assume the software will rapidly approach human level for other situations over the next year or two. It's a bright future for self-driving!

I'll continue to update this article as I find more failure modes.