This post contains the background needed to start building high-performance filesystems.

Filesystem means different things to different people. Most people interpret filesystem as POSIX filesystem — i.e. a thing with files, permissions, directories, etc.

To simplify this post, let's consider a much less complex API:

put(filename, data)

get(filename) → data

delete(filename)

list_from(start) → filenames

In practice, put doesn't take the data as a literal chunk of data, but instead takes a stream of data. Similarly, get and list_from return streams of data.

This interface is general enough that you can implement a POSIX filesystem as a layer on top of it if you wanted, or you could extend this interface with whatever features you want.

If you want to a build a high-performance filesystem, the most important thing is to understand how the underlying storage media works.

In this post we'll talk about HDDs and SSDs, but there's some carryover that can apply to more exotic media like tape drives as well.

Hard disk drives, HDDs, are your "traditional" storage technology. While they're being rapidly replaced by SSDs in consumer use cases (e.g. laptops), they are ~10x cheaper per terabyte than SSDs, so still dominate bulk storage.

The most important thing to know about hard drives are their performance characteristics. These characteristics directly flow from the hardware, so let's take a look at the hardware.

The main thing that jumps out are the stack of platters, and the arm reaching out over them. A lot of people thing of a hard drive like a vinyl record, but it's actually a stack of them, and there is data stored on both sides of each platter.

The arm is actually a stack of arms that simultaneously read and write to all the platters. Multiple platters increase storage capacity and throughput via parallelism. Modern (circa 2024) disks have in the realm of 10 platters.

Note that while there are 10 arms for 10 platters, there is only 1 actuator. All the arms are connected and do not move independently of each other. So a simplified mental model is that there is just 1 platter, but it magically has 10 times higher storage density and throughput.

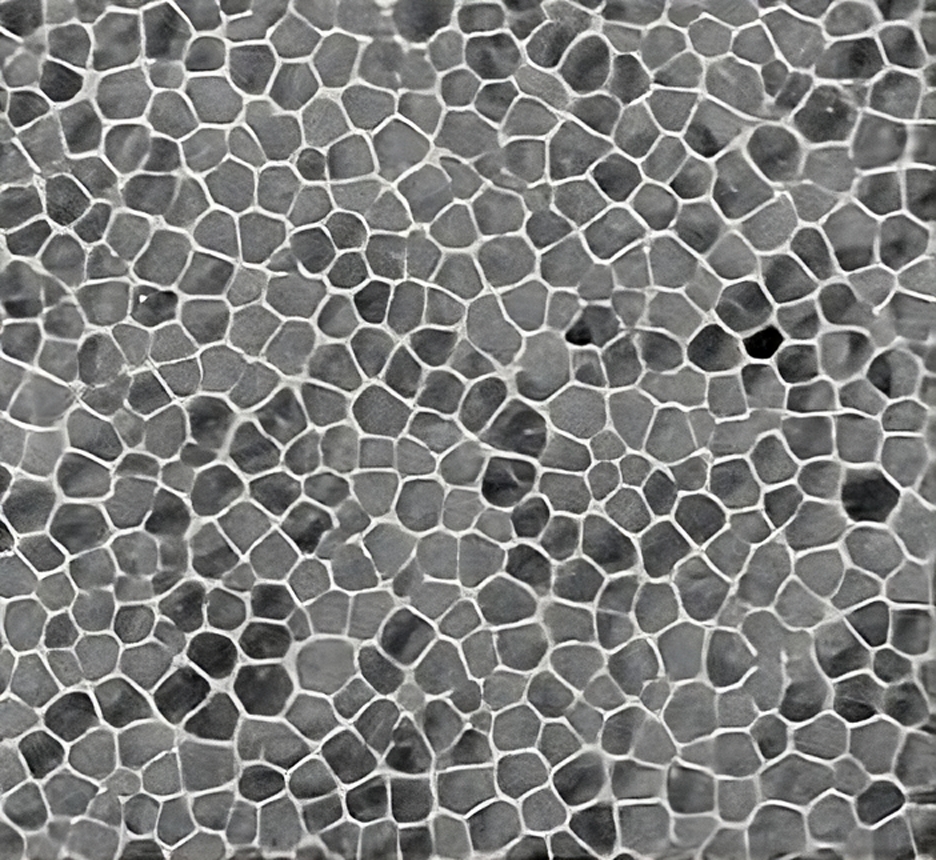

At a microscopic level, HDDs look like this:

The platter is a glass or ceramic material coated in a layer of magnetic grains. The grains are around 5—10 nanometers in scale. Each bit is about 10 grains.

This might be surprising to you! You might have been expecting something a little more structured. But in reality the actuator just has enough accuracy to return to the same tiny location on the disk, and that location represents a bit. To set the bit, the head just blasts the magnetic field up or down for 0 or 1. Reading a bit is just reading the field of those grains.

It's under-appreciated how much insane engineering goes into hard drives. The head is designed a little like an airplane wing, using the air dragged the spinning platter to generate just enough lift to float a nanometer off the surface.

If you were to scale up a bit to the size of a blade of grass, this would be like a 747 aircraft flying at 1,000,000 kilometers per hour, 10 centimeters above the ground, counting the blades of grass as they pass — and only making a mistake every billion kilometers!

HDDs have gotten more storage capacity over the years mainly by stacking more platters, and packing in more grains.

The limiting factor to stacking more platters is actually aerodynamics. If you make them too thin, they start to flutter due to environmental vibrations and interactions with the air. If they flutter, they can collide with the head and damage the platter. Some modern hard drives are filled with helium to reduce air resistance and enable more platters.

The limiting factor to packing more grains is magnetic physics. There's a trilemma between grain size, thermal stability, and writability.

Smaller grains are good because they increase density. But it's hard to get a magnetic field that is strong enough and small enough to do writes. And they are less thermally stable — they have a tendency to flip orientation easier under thermal fluctuations.

Thermal stability is nice for data integrity, but the same physics that makes the grains more stable also makes them harder to write to — you need a stronger magnetic field which also necessitates larger grains.

Around 18TB drives, manufacturers started facing diminishing returns trying to stack more platters and pack more grains, so they've moved on to more interesting designs.

The first innovation to hit the market was shingled drives (SMR — shingled magnetic recording). SMR drives make the bits overlap some, like shingles. They're able to do this because the write head is actually wider than the read head. Writing requires a stronger magnetic field — you have to really blast those grains — whereas reading can be done more precisely.

In conventional drives, the read head just targets the center of the track made by the write. But if you offset the read and write head, you can lay out the data in a more tightly packed singled pattern.

This gets you about 20% more storage density, but the tradeoff is writing to offset X destroys some data beyond offset X. The drive is divided into zones with gaps between them, and each zone must be written sequentially. Zones are typically 256MB.

So while a conventional hard drive can be thought of essentially as an array of bits you can mutate however you like, an SMR hard drive should be thought of as an array of zones, where each zone has this API:

append(data)

read(offset)

reset()

The lack of ability to write wherever you want is a huge restriction and completely breaks most currently-used filesystems.

You can emulate conventional hard drives simply: whenever you want write to a random offset, just read the whole zone into memory, mutate the buffer, then rewrite the whole zone. This is, of course, insanely wasteful and slow.

Consumer-grade SMR hard drives often ship with firmware that does something a little smarter than this, and will work fine for most normal home computers, but is totally insufficient for a high-performance storage system.

The other major innovation in storage density is energy-assisted magnetic recording (EAMR). These drives are still under active development, but are starting to see use in scaled storage systems. They use lasers (HAMR) or microwaves (MAMR) on the write head to temporarily heat up the grains during a write.

Heating up the grains enables using smaller grains that aren't writable under normal temperatures. The main tradeoff here is complexity — more failures points increases drive failure rate for scaled storage services.

If you want to make an optimized filesystem for HDDs, you have to internalize that they are physical devices with spatial dimension — not a bunch of equally-accessible bits in RAM.

First-byte latency

It takes about 10 milliseconds for the actuator to move the arm from the outer edge of the platter to the inner edge.

A typical HDD spins at 7200 RPM, or about 1 rotation every 8.5ms.

So the best case is the data you want to read is directly beneath the read head — 0 millisecond latency! The worst case is you have to actuate across the whole platter, and when you arrive, you just missed the data swing by, and you have to wait for a whole extra rotation — 18.5ms latency!

So, if you just place data randomly, you can expect about half that latency on average — call it 10ms for simplicity.

Throughput

Once you've paid that latency penalty and started reading, how fast can you read? This is where the physical nature of the drive actually makes a huge impact — the outer edge is moving faster than the inner edge! About 3x faster! And this directly translates to 3x higher throughput!

Schedulers

You can get pretty far with just these numbers, but there's one more trick to juice performance. You can enqueue multiple simultaneous IOs on the hard drive and the firmware will use some variant of the elevator algorithm to serve those IOs in an order that maximizes some utility function — usually throughput.

So if you enqueue IOs at offsets [5, 1, 8, 3, 9] — which might cost 50ms to serve in FIFO order — the algorithm might execute them in the order [5, 3, 1, 8, 9] or perhaps even [1, 3, 5, 8, 9] which might only cost 30ms total due to reduced seek latencies.

Some storage services prefer to optimize for reducing tail latency, so they may use firmware with something closer to FIFO. You can get even more sophisticated by using firmware with some notion of IO priority, so high-priority requests can jump the queue while low-priority requests are served by a throughput-maximizing scheduler.

There is no such thing as an optimal scheduler — it's highly workload-specific.

A note on writes

The above is mostly focusing on reading data. Writes are a little funky because HDDs will typically buffer megabytes of writes on a little memory on the drive and actually sneak them in to the IO queue opportunistically.

For example, if you have a write buffered for offset 5, and you do a read at 2, and then a read at 8, it might sneak in the write on the way between those two reads. Buffering writes also allows merging adjacent writes into a single operation.

You might think this presents a durability risk, but modern enterprise-grade drives can use the existing rotational inertia of the platters plus some capacitors to get the data onto the disk in a power-outage scenario.

SSDs have been taking the consumer world by storm, mainly due to speed. They have less adoption for scaled storage systems because they are ~10x more expensive than HDDs per byte. They still have a place in scaled storage systems as caches — if 1% of your bytes accounts for half of your activity, it will often make sense to offload that hot 1% — we'll talk more about that later.

SSD's main difference vs HDDs is the lack of moving parts — everything is just circuits. From the user's point of view, you can think about it a lot more like an array of bytes, whereas you have to be keenly aware of the physicality of an HDD. That said, SSDs also have some interesting physical limitations that are mostly hidden from the typical user, but the sophisticated user can benefit from knowing more about.

SSDs store bits in cells that hold a certain voltage. In the simplest implementation, a high voltage is a 0 and a low voltage is a 1. But modern SSDs use multiple voltages to store more bits in a cell. With 4 voltage levels, you can store 2 bits: 00, 01, 10, 11. With 8 voltage levels, you can store 3 bits. Modern enterprise SSDs use 16 voltage levels to store 4 bits per cell.

The problem is every time you overwrite a cell, electrons become trapped in the insulating layer of the cell, which slowly degrades the ability to distinguish between all 16 states. After a certain number of cycles, the cell is effectively dead. So the trend of packing more bits per cell is trading off lifespan for storage capacity.

A modern quad-level cell (QLC) may only be writable 1000 times before it dies. This isn't a lot! You could do 1000 writes to a single cell in a second or two with a simple loop. How does anyone use SSDs?!

The answer is that SSDs do a little trick. When you write to offset X, the firmware finds a cell Y that has the lowest number of overwrites and actually stores your data there, then stores an X → Y mapping in a flash translation layer (FTL).

Of course, maintain counters and storing translation mappings on a per cell basis would be impossible — the counter and mapping take up more space than the amount of bits in the cell. So SSDs organize the drive into pages — 16KB on modern drives — that is the minimum unit of IO.

Aside: HDDs also use pages — 4KB each — that we neglected to mention in the HDD section because they are somewhat less pertinent for that discussion.

So you can't just flip a single bit. You'd have to instead read the page into memory, flip the bit in that buffer, then rewrite the whole. And the SSD wouldn't actually write the page in the original location, it would write it in a new location and just update the mapping.

This isn't the end of the story, though. For circuit complexity reasons that I'm not an expert on, each page can't be erased individually. They are organized into blocks that share circuitry for erasing the whole block. Erase blocks are a few megabytes each.

So SSDs are actually remarkably analogous to to the SMR hard drives discussed above, where erase blocks correspond to SMR zones. The main difference is the SSD firmware hides this from users. Consumer SMR drives can also ship with firmware that hides the zoned nature from users, but the performance implications are such that serious users want full control.

So the lifecycle of data on an SSD is something like:

That last step implies something interesting. Those tombstoned pages represent physical storage capacity that isn't referenced. SSDs have more physical capacity than logical capacity to account for the space used by tombstoned pages.

So in the steady state, to write a full block worth of pages, you also have move an old block's un-tombstoned pages first. The ratio of logical pages written vs physical pages written is known as the write amplification.

In practice, most SSDs have around 30% extra capacity for tombstoned data. Because you can harvest the block with the most tombstones, in the best case scenario, there is a block that is 100% tombstones, so you just erase it and write your data with no extra work. The worst case is that every block is exactly 30% tombstones, so to get 1 block worth of space, you have to harvest 3.3 blocks on average.

In practice, the tombstones are not perfectly evenly distributed, so most consumer SSDs will see a write amplification of somewhere between 1 and 3 depending on the workload.

You may have heard that SSDs are not good for small-file workloads. Hopefully now you know why — the 16KB minimum page size combined with write amplification will lead to accelerated wearout of the finite number of write cycles!

Now that we know how the storage media works, we can start using that knowledge to design a filesystem.

Let's sketch out the hardware our custom filesystem is running on. For our purposes, the primary components to think about are main storage (HDD), cache storage (SSD), RAM, and CPU. In reality you'll also probably need to think about network (NIC, TOR) limits as well, but let's ignore network for this article.

Let's design for SMR drives. All the biggest modern hard drives are SMR, and those are the cheapest per byte. If we want to compete in the storage space, we have to stay with the industry trends.

There's a big problem with the trend of drives' getting bigger and bigger every year. The amount of IO the drives can do doesn't change.

Drives today spin once per 8.5ms and take 10ms to sweep the arm across the platter just like they did 10 years ago. So modern drives have the exact same amount maximum of IOs per second as those old drives. The have the same IO budget, despite having more capacity.

It may not seem interesting at first, but this fact is the main driving factor for innovation in the filesystem space.

The whole problem is that workload scales with capacity. Twice as many files, twice as many requests for files. So you have to fit twice as many requests into the same IO budget. To do this, you must be twice as clever.

Minimizing IOs to disk is the name of the game in scaled storage filesystems.

To help minimize HDD IO, we'll try to offload some IO onto some SSD.

SSDs are about 10x more expensive than HDD — too expensive for main storage. But those latest and greatest dense hard drives are a lot cheaper per byte than the older, less dense drives. So if we can spend 10% more on an SSD to enable getting 20% more bytes per dollar on HDDs, that's a good trade.

We probably don't actually need too much RAM. Since HDDs are so slow, it makes the difference in RAM and SSD seem negligible in comparison. So most stuff we might consider storing in RAM, we can store for 10x cheaper on SSD.

The main difference between SSD and RAM worth calling out is endurance. As mentioned in the background section above, SSDs can only be overwritten perhaps 1000 times before they are dead. RAM does not have this constraint. So we still need to be careful about how we use our SSD so we don't kill it too fast!

But we'll still need a few GB of RAM for data movement. Maybe a few gigabytes to cache the 0.01% currently-going-viral files that are too hot for even an SSD. That's about it.

The main thing the CPU does is shuffle data around with memcpys which are super fast — in the realm of 1GB/s per core. Since you might only be able to get 100MB/s off a hard drive on average, a single core can service 10 drives worth of memcpy.

You'll also probably be computing checksums of all that data if you're smart. Checksums are more expensive than copy memory, swag it at 2x more expensive for the cheaper checksum algorithms.

So we land at a calculation of wanting about 1 core per 2-4 disks at max load.

If you're optimizing for extreme performance, you have to know how the hardware works and what your workload is. We've covered hardware, so now let's take a swag at workload. Let's design for a "social media photo/video" type workload.

So most photos are JPEGs, usually pretty highly compressed, about 100KB or so. There's also tiny thumbnail images, higher resolution banner images, and then some videos and can be a lot bigger.

In general, we can think about these file sizes as two cases:

The amount of time we spend between receiving the request and sending the first bytes dominates. This time is spent waiting on other queued IOs to finish, disk seeks, etc.

The amount of time we spend actually sending bytes dominates. This is bottlenecked by how fast we can read from the disk while competing for scarce IO with other requests.

In practice, these cases share some overlap in the 1-8MB range. Such a fuzzy cutoff doesn't matter — we're going to be optimizing for both these workloads — it's just a helpful frame to understand what we're optimizing.

Don't use a garbage collected language. Even garbage collected languages that claim to have ultra-fast GC algorithms like Golang will occasionally see GC pauses of >10ms. A serious storage server will be seeing thousands of requests per second that will cause longer pauses. These longer pauses will dominate your 99th percentile first byte latency.

Dropbox wrote their first storage server in Go, and then later rewrote it in Rust. S3's first storage server was in Java, and then was rewritten in Rust. C++ would be a fine choice if you already know C++. But if you don't know C++ already, Rust probably has fewer ways to shoot yourself in the foot and accidentally corrupt data or crash.

One rotation around the disk is a few MB (depends a bit on what radius offset you're at). So if you were to read a 16MB file in one big IO, that would mean waiting for the disk to spin around say, 6 times. That's 50ms! That means any other requests that come in during this time will have to wait at least 50ms.

It's better to split the IOs up into smaller chunks, so you can interleave requests. E.g. serve the first half of a large file, then serve a request for a different small file, then return to the big file for the second half.

You'll see this kind of throughput vs latency tradeoff appear in many systems, but it's everywhere in storage.

It takes ~10ms to move the head from the inner edge of the platter to the outer edge. So we can minimize the probability we have to do this by placing the most popular data midway between the inner and outer edges of the platter. Then, most of our excursions will only be left or right of the middle — half the distance, half the time.

Note that half the offsets isn't the middle radius, because the outer section has a higher area than the inner section. It's more like 30% of the bytes will be inside the middle radius, and 70% outside.

This method works best if you to have some inductive bias about how popular a file will be. E.g. logs_2011_05_23.txt.gz? Probably not popular. cool_meme.jpeg? More likely.

Even without an inductive bias, you can still use background cycles to move popular data to the middle. But if you're building a custom filesystem, you probably have better than a guess about your workload.

This is another one to try to reduce time spent moving the arm, but for this one, where the data doesn't matter, just as long as it's near the right neighbors.

E.g. try to store vacation1.png next to vacation2.png, because if someone opens their photos, they'll fetch both files in sequence. Or if you're implementing a POSIX filesystem, put files from the same folder nearby. That kind of thing.

This directly contradicts the advice for minimizing first-byte latency. But it's true! You don't want to do big IOs to minimize first-byte latency. But you really want big IOs to maximize throughput.

Luckily, there's a knee in the curve around the 1-4MB IO size threshold. If you really care about first-byte latency because you get a lot of requests for small files, err towards 1MB max IO size. If you are willing to sacrifice a bit of first-byte latency for throughput, go up to 4MB.

You get really diminishing improvements to first-byte latency below 1MB, and to throughput beyond 4MB.

If your filesystem is backing a storage server, it's sending files over the network in response to requests. Sending big files over the network takes non-zero amount of time. While one thread sends data over the socket, another thread should be doing IO to the hard drive in parallel. You might even have a 3rd thread doing file integrity checksumming or decryption or some other compute task in the middle.

An alternative to explicit threading is to use some async ecosystem to accomplish the same thing. That said, the request rates of HDD-backed storage servers can never be high enough to need the other performance benefits of async programming paradigms, so consider that when deciding on threads vs async.

The maximum amount of requests you can expect to serve is 120 per disk per second. Because the disk spins 120 times per second, you only have an opportunity to serve a request once per revolution. So even with 100 disks, modern linux can manage 10,000 threads just fine.

Filesystems have a lot of minutia if you zoom in enough. Before we get to that, let's stay zoomed out and just think about some high-level data layout. After all, the file data itself will constitute more than 99% of the disk space, and the metadata less than 1%. So let's focus on the 99% first, even though the 1% holds a lot of complexity.

Here's a little diagram showing an SMR hard drive. The sections represent the 256MB zones we described above.

Let's insert some files in one of the zones. Each colored block represents a file.

Let's keep adding files. And also let's delete some files, represented with the red X marks. Remember that SMR zones can't be overwritten in-place, so we can't just re-use that space for the new files. We have to keep appending to a new zone.

So how can we actually get that deleted space back? We have to run some kind of compaction process. Let's copy the remaining files to a new home.

Now that they are copied, we can clear out the original zone to get that space back.

So this is what SMR drives force upon us. Note that this is remarkably similar to how SSDs work internally. It copies still-reference pages from a source erase block to a destination erase block, then erases the source block.

Also note that this process is kind of reminiscent of the defragmentation process you used to have to run on your laptop back in the 90s and early 2000s to optimize disk space.

Those drives weren't SMR, but even non-SMR drives have a similar problem: deleted data creates holes that must be filled, and you can never fill them perfectly! Eventually you have a bunch of tiny gaps that waste space. The tiny gaps build up until the sum total of wasted space is non-trivial!

So you run a defragmenter. You'd hear the hard drive clicking for a few minutes while the progress bar moved slowly. It was doing a process kind of like this! Moving files around into a more compact form.

This point is important. All storage systems pay some cost to handle deleted data. It's either explicit, like running a defragmenter, or implicit, like how SSDs move and remap data internally. For SMR drives, we really want to do it explicitly so we have full control. SSDs may have abundant IO budgets, but HDDs do not, we need to squeeze out every bit we can!

Handling data deletion via compaction will inform a lot of our design. Specifically, questions we need to confront are:

Compaction IO & space waste

Points 1 and 2 are actually related. If we let 20% of the disk be wasted with deleted bytes, on average, each zone will be 20% deleted. So to get 1 zone worth of free space, we need to run compaction on 5 zones. This means our write amplification would be 5. For every 1MB of new files, you have to — on average — compact 5MB of old files.

This is actually too pessimistic, because in reality, while the average amount of deleted bytes in a zone is 20%, they are not evenly distributed across all zones. Think about it. The zone you just compacted and filled with fresh data will be 0% deleted. The zone you just compacted and filled with data yesterday might only be 1% deleted. The longer ago a zone was filled, the more time it has had to accumulate deletes.

The cheapest zone to compact is the one with the most deleted bytes. Because the amount of work you have to do is a function of the non-deleted bytes. In the extreme, if a zone is 100% deleted, you can just clear it with no work at all.

So if deletes come in randomly, and you always harvest the zone with the most deletes, you'll actually wind up with a distribution of deleted data in which the zone you just compacted has 0% deletes, and the zone you are just about to compact has 2x the average, and all the other zones are linearly interpolated between those two extremes.

So the average is still the same, but the write amplification is actually half of what you'd get if the deletes were spread totally evenly. Happy news for us, since that means compaction will take half as much IO as we might have initially thought.

Additional compaction improvements

You can do even better than the happy news about the distribution above. That distribution assumes deletes come in randomly. But this isn't true for most workloads!

In reality, deletes have a lot of correlations. Whole folders get deleted at once. Log files that get deleted after an expiration date. Even if you know nothing about the file, you can assume at any time, a file is at the midpoint of its life, so its expected delete day is its current age times 2 — this is known as Lindy's law.

Let's call a file's delete time its death day. To minimize compaction work, you really want to put files with similar death days in the same zones. If you were a god with perfect foresight, you could get your compaction IO down to nearly zero. As we are not gods, we will have to settle for whatever marginal improvements our approximations buy us.

You have a few opportunities to do this clever data placement. The first and obvious opportunity is during the initial file creation. But compaction itself gives you another opportunity. Every time you compact a zone, you have to move those files somewhere. You might as well move them intelligently.

Compaction also gives an opportunity to do other fun stuff.

You'll want to perform checksum integrity checks to catch any bitflips that might have happened to the data at rest.

You can also try to move the data into performance-enhancing locations like we described above — popular data to the middle of the platter, big stuff to the outer-rim, etc.

How much space do we waste?

Above I threw out the number 20%. Let 20% of the disk be consumed by deleted files waiting to be compacted.

But wait… SMR drives only get us a 20% capacity improvement over conventional HDDs. So we probably don't want to burn all those gains to support the compaction process that SMR makes us do. Otherwise, we'd be better off just using a conventional HDD and doing a more traditional defragmentation process.

So instead let's use 10%. SMR gets us 20% more capacity, and we use half that extra capacity to support the compaction process that SMR forces us to run. So we still come out 10% ahead.

This means our worst-case write amplification will be about 10, but remember that the worst case isn't realistic. A realistic delete distribution gets us down to 5, and applying some of those more clever heuristics about death days might get us even lower, maybe down to 3 or so.

It's worth noting that write amplification asymptotically approaches infinity as you get closer to 0% space waste. If instead of 10% waste, we chose 5%, our write amplification would increase 2x, or if we chose 1%, it would increase 10x relative to the original 10%.

Where you set this value depends a bit on your workload — you just want to set it as low as you can without the IO it causes to interfere with normal requests. And if you can't set it below 20%, you're better off not using SMR drives and all the headaches they bring.

Keeping track of everything

So we've got all these files, and they are constantly getting moved around. How do we keep track of all this?

All filesystems have a datastructure called the index. The index keeps the mapping from filename to file location. It'll also often have metadata like permissions, create/modified dates, etc.

We'll talk more about the index below, but for just the discussion about compaction, the main salient point is that the majority of index updates happen due to compaction.

You might think that the main cause of index updates is files getting created and deleted. But in a compaction regime, with a write amplification of 5, for every 1 file you create, you have to move 5 files! This entails updating the index to point to the new location.

As we mentioned above, the index is the data structure that has the mapping from filename to file location on the disk.

First, let's talk scale. The index is some data. How big is that data? Let's say each filename is 16 bytes on average. The disk location is another 8 bytes. So each value in the index (inode in filesystem parlance) is 24 bytes total. If we have a 16TB HDD, and the average file size is 64KB, that's 256 million files to keep track of. So our index would be 6GB.

6GB is tiny compared to the 16TB of data it indexes. We can trivially fit this thing into RAM, right? My phone has 6GB of RAM. Not so fast! Remember in the hardware section above we said 1 CPU core has enough power for about 4 hard drives? This implies our storage node probably has a bunch of hard drives to support.

If we have an server grade CPU with 16 cores, we might have around 64 hard drives. So the sum total index size is closer to 400GB! That's no longer a trivial amount of RAM — several thousand $ worth. Still not a lot compared to the cost of the disks — maybe 5% of the total server cost — but not nothing either!

We can do better. Instead of using RAM, let's use SSD to store the index. It's 10x cheaper, and still mega-fast (compared to HDD).

What's the structure of the index? You hear "mapping" and your first thought is probably a hash table.

Hash table blues

There's a few reason why a hash table won't work.

The simplest is that hash tables don't allow us to efficiently implement filename-ordered list. You really want filename-ordered list to implement most useful services, or things like a POSIX filesystem.

There's also a more subtle reason. Recall that in the hardware background section, I mentioned that each SSD cell can only be overwritten a finite number of times — around 1000 overwrites. A naive hash table implementation would do 1 page overwrite for every index update — you can't batch them together either, because hashing means they'll be scattered randomly across the whole SSD.

How bad is this? Let's say your disks are getting 20 new files per second each. That means in the steady state, you're also doing 20 deletes per second. And we have to take into account the index updates due to compaction. All in, we're at about 100 updates per second per disk. So 6400 page writes per second across our 64 disks.

Since each page — the minimum unit of SSD IO — is 16KB, that's about 100MB/s, so it's within the capability of the SSD to do that much — but how long will the SSD live? How long until we burn through each page's 1000 lives?

Let's say we have a 512GB SSD. That's 32 million pages. Each can be overwritten 1000 times. So how long until we burn through 32 billion page writes with our naive hash table? Only 2 months at 6400 writes per second.

That's not good! Most storage systems want their hardware to last 5 years or so to be economical.

Search trees, a new hope?

So the first problem with hash tables was the lack of ordered traversal. You hear "ordered mapping" and you, a computer science whiz, think of a search tree.

Can search trees also help us with our SSD endurance problem too? We saw with hash tables that mutating nodes in-place is problematic, because nodes can be stored all over the disk! We'd really like a way to batch mutations together, and in-place mutation is antithetical to that.

If you're a functional programmer, your spidey-sense should be tingling right now. Trees? Mutation bad? This sounds like a job for… immutable datastructures!

For the non-functional programmers, the basic idea is that to do a mutation, you don't mutate the node in-place. Instead, you allocate a whole new node and update some pointers.

Suppose you had this simple tree:

You want to mutate D. So to do this, you have to allocate a new node, call it D2, and update some pointers:

Functional programmers will be shaking their heads right now. Why? Because the picture above is actually incorrect. We mutated B in-place! And mutations aren't allowed. So we have to do to B what we did to D. Allocate a new B2, and that thing points to the new D2. But who points to B2? We have to allocate a new A2!

So C, F, and E are unchanged, but we had to allocate all the way up the trunk of the tree back to the root.

You might think "this sounds like a lot of extra work" — and it is more work in the abstract compared to a hash table. You have to update O(log(N)) nodes instead of O(1) nodes. But the magical part is that these new nodes can all be written out together on disk.

Since the new nodes are just little bits of data and pointers, they could be anywhere on that SSD. We might as well put them together, so the IO can be batched. It was those 6400 random writes per second that killed our SSD. This technique can make the writes non-random.

Ok, so search trees have some nice properties. Let's dig into the details.

Lookups

How fast are lookups? We have 64 disks with 256 million files each, so 16 billion total files. A binary search tree would be 34 nodes deep. SSDs are really fast, about 100 microseconds per lookup, so that would be 3.4ms per lookup. Not awful, but we can do better.

What if instead of a binary tree, we used a 256-ary tree. That would only be 5 levels deep. 500 micros! Way better — a rounding error when compared to HDD IO times.

Trees also have the nice property that the upper layers are exponentially smaller. In a 256-array tree, each layer is 256x smaller than the layer below it. So for a 512GB bottom layer, the layer above that is only 2GB! That's small enough to easily fit in RAM!

So really, we can cache all but the bottom layer of the index in RAM — our 500 micros just became 100 micros. Practically nothing in HDD-land.

Updates

Time to do the dismal math. How fast will we kill this drive? 6400 updates per second. Each update is the 24 byte node, but the bulk is now the trunk rewrite we have to do.

In a 256-ary tree, each inner node is in the realm of 256*24=6KB, and we have to do 4 levels of inner node, 24KB. This is bigger than the 16KB page we had to rewrite for the hash table implementation! Are we cooked?!

Not so fast. Maybe we can amortize some of this. We're doing 6400 updates per second. All of those updates share the same root node. And each of the 256 nodes in the 2nd layer will get 25 updates each. Then it becomes more diffuse with ~1 update per branch.

So we'd still have about 6400 4th and 3rd layer updates, but the top 2 layers get amortized down to zero. So we've cut our IO in half by just buffering 1 second worth of updates.

If we buffer for longer, say, 5 minutes, we can amortize another layer, cutting our IO in half again.

Unfortunately this still isn't enough! Even if we're lucky, our SSD will only last for a year or so at this rate.

Log-structured merge trees

A log-structured merge tree is a datastructure specifically designed for on-disk, high-insert workloads. This sounds perfect!

The key idea is you maintain multiple layers of tree. You do mutations into the top layer. When that layer grows too big, you do one big bulk merge of it to the next layer. When that layer grows too big, you do an even bigger bulk merge into the next layer. Etc. So you're getting maximum amortization.

There are a lot of LSMT variants, so let's just dive into building one suited for our workload. Like B+ trees, LSMTs store leaf nodes as blocks of contiguous values.

So rather than each leaf node being a single key-value pair, it's a decent sized block of pairs. Let's pick 16KB as an arbitrary block size. To do a lookup, we'll need to fetch the entire 16KB into memory and then binary search that buffer. This isn't a big deal, CPUs are fast!

Let's sanity check the SSD bandwidth. If we guessed our drives will get 20 file creates per second, let's say they'll get 40 file reads per second. That's 2500 reads across all 64 drives. So if each of those requires fetching 16KB from the SSD, that's 40MB/s — well within the SSD's capability.

Ok, so we have these 16KB blocks. How many do we have? If each inode is 24 bytes, each block will hold about 700 entries. We have 16 billion files across all the disks. This means at a minimum, we'll have about 25 million blocks.

For each block, we really just need to know the first filename on it and where it is on the SSD. So this actually isn't that much data! Keeping track of the first filename and location of every block would only cost about 3GB of RAM. That's affordable.

So, we can do the reads, we can track the metadata. And this is just a big group of blocks. Where's the complexity?

Inserts, of course. How do we mutate these blocks? We can't rewrite a block every time we need to do a mutation. That's exactly what busted us with our previous ideas.

Can buffering and amortization save us? If we buffer 5 minutes worth of mutations, that's about 2 million mutations. Spread cross 25 million blocks. Not a lot of amortization, sadly!

What if, rather than flushing the buffer to the main set of blocks, we flush it to a whole new set of blocks. This new layer of blocks will conceptually live "on top of" the other. When we do queries, we check the top level first, and only fall through to the bottom level if we don't find what we're looking for.

So we take our 2 million mutations we've accumulated over the last 5 minutes, and pack them into a tidy 3000 new blocks. Much smaller than 25 million blocks! Only costs 3000 page writes — amortized over 5 minutes. Only 165KB/s. Basically nothing. Our SSD will live forever at that rate!

Now when we do lookups, we first check the top layer, then the bottom layer if we have to. Double the lookups, but SSDs are fast!

5 more minutes pass. We've accumulated 2 million more mutations. What do we do? Stack another layer on top again? This will get out of hand pretty quickly.

What if we instead merged our new 2 million mutations into that small top layer we have? We produce a new top layer of 4 million entries. 6000 blocks to write. Still very cheap.

We do this 10 more times. Now, to merge in our 5 minutes worth of mutations, we have to rewrite 60,000 blocks! If we keep this up, we'll be back where we started, rewriting every block per 1 mutation.

Here's an idea: what if we add another layer on top? A 3-layer tree. But after a few more hours, our new top layer is the same size as our middle layer! We've hit the same problem!

But here's the fun part. Now let's merge those two layers in one big operation. Now we can make a brand new tiny top layer!

Hopefully you see the generalization of this process. You have your very top level RAM buffer — 5 minutes worth of mutations is what we've been working with, 2 million entries.

The first layer on SSD is the same size as that thing — 3000 blocks. When you go to flush 2 million more entries, you do a big rolling merge, creating a 6000 block layer. If there is a 6000 block layer below, you include it in the rolling merge, producing a 12000 block layer. All the way down.

So the max size of this tree is when it has the layer sizes 2M, 4M, 8M, 16M… 4B, 8B, 16B.

That's 13 layers, and we'll need double the SSD capacity as we initially thought to hold it all. SSD is cheap compared to RAM, so we still come out way ahead.

How is it on the SSD lifetime front? Each 24 byte entry will get rewritten 13 times (one for each layer) while it makes its way down. So that's 312 bytes of writes per mutation. 6400 mutations per second. That's only 2MB/s on average!

With a 1TB SSD, that's 6 days to fill it once. So it'll take 6000 days to use up all its lives — 16 years! We're golden.

But we've introduced a new nasty problem. 13 levels. Each file read needs to check each layer in order — and 50% of reads will go down to the bottom layer. That's a lot of IO, and 1.3ms of time on average. Can we do better?

Of course. We'll just stick a Bloom filter on each layer. A 10% false positive rate will get the number of accesses from 13 down to 2 or so.

Have we totally solved it? Are we done?

There's one more hickup. We have 5 minutes worth of buffered index mutations sitting around in RAM. What happens if we lose power or crash for some reason? We just lose all that data? That probably isn't acceptable.

The solution is a journal. This is a place you just write all the mutations as they come in with a dumb, flat format. Whenever you flush the main buffer to the LSMT, you can prune the journal.

If you lose power, on startup, you just replay the journal. You can also store the journal on SSD. It's small and only increases the IO by 1 more copy per entry — about 5%.